A Gentle Introduction to Particle Swarm Optimization

- Get link

- X

- Other Apps

Last Updated on October 12, 2023

Particle swarm optimization (PSO) is among the many bio-inspired algorithms and it is a simple one to hunt for an optimum decision throughout the decision space. It is completely totally different from totally different optimization algorithms in such a way that solely the goal function is required and it isn’t relying on the gradient or any differential sort of the goal. It moreover has only some hyperparameters.

In this tutorial, you may research the rationale of PSO and its algorithm with an occasion. After competing this tutorial, you may know:

- What is a particle swarm and their conduct beneath the PSO algorithm

- What type of optimization points shall be solved by PSO

- How to resolve a difficulty using particle swarm optimization

- What are the variations of the PSO algorithm

Kick-start your enterprise with my new e ebook Optimization for Machine Learning, along with step-by-step tutorials and the Python provide code data for all examples.

Let’s get started.

Particle Swarms

Particle Swarm Optimization was proposed by Kennedy and Eberhart in 1995. As talked about throughout the distinctive paper, sociobiologists think about a school of fish or a flock of birds that strikes in a gaggle “can profit from the experience of all other members”. In totally different phrases, whereas a hen flying and searching randomly for meals, for instance, all birds throughout the flock can share their discovery and help your whole flock get among the finest hunt.

Particle Swarm Optimizsation.

Photo by Don DeBold, some rights reserved.

While we’re capable of simulate the movement of a flock of birds, we’re capable of moreover take into consideration each hen is to help us uncover the optimum decision in a high-dimensional decision space and among the finest decision found by the flock is without doubt one of the finest decision throughout the space. This is a heuristic decision on account of we’re capable of certainly not present the precise world optimum decision shall be found and it is usually not. However, we ceaselessly uncover that the reply found by PSO is sort of close to the worldwide optimum.

Example Optimization Problem

PSO is best used to hunt out the utmost or minimal of a function outlined on a multidimensional vector space. Assume now we now have a function $f(X)$ that produces an precise price from a vector parameter $X$ (akin to coordinate $(x,y)$ in a airplane) and $X$ can deal with practically any price throughout the space (as an example, $f(X)$ is the altitude and we’re capable of uncover one for any degree on the airplane), then we’re capable of apply PSO. The PSO algorithm will return the parameter $X$ it found that produces the minimal $f(X)$.

Let’s start with the subsequent function

$$

f(x,y) = (x-3.14)^2 + (y-2.72)^2 + sin(3x+1.41) + sin(4y-1.73)

$$

Plot of f(x,y)

As we’re capable of see from the plot above, this function seems like a curved egg carton. It is simply not a convex function and subsequently it is onerous to hunt out its minimal on account of a native minimal found is simply not primarily the world minimal.

So how can we uncover the minimal degree on this function? For sure, we’re capable of resort to exhaustive search: If we confirm the price of $f(x,y)$ for every degree on the airplane, we’re capable of uncover the minimal degree. Or we’re capable of merely randomly uncover some sample elements on the airplane and see which one gives the underside price on $f(x,y)$ if we count on it is too pricey to look every degree. However, we moreover remember from the type of $f(x,y)$ that if now we now have found a level with a smaller price of $f(x,y)$, it is less complicated to hunt out a good smaller price spherical its proximity.

This is how a particle swarm optimization does. Similar to the flock of birds trying to find meals, we start with fairly just a few random elements on the airplane (identify them particles) and permit them to seek for the minimal degree in random directions. At each step, every particle ought to go looking throughout the minimal degree it ever found along with throughout the minimal degree found by your whole swarm of particles. After positive iterations, we take into consideration the minimal degree of the function as a result of the minimal degree ever explored by this swarm of particles.

Want to Get Started With Optimization Algorithms?

Take my free 7-day e mail crash course now (with sample code).

Click to sign-up and as well as get a free PDF Ebook mannequin of the course.

Algorithm Details

Assume now we now have $P$ particles and we denote the place of particle $i$ at iteration $t$ as $X^i(t)$, which throughout the occasion of above, now we now have it as a coordinate $X^i(t) = (x^i(t), y^i(t)).$ Besides the place, we actually have a velocity for each particle, denoted as $V^i(t)=(v_x^i(t), v_y^i(t))$. At the following iteration, the place of each particle might be updated as

$$

X^i(t+1) = X^i(t)+V^i(t+1)

$$

or, equivalently,

$$

begin{aligned}

x^i(t+1) &= x^i(t) + v_x^i(t+1)

y^i(t+1) &= y^i(t) + v_y^i(t+1)

end{aligned}

$$

and on the similar time, the velocities are moreover updated by the rule

$$

V^i(t+1) =

w V^i(t) + c_1r_1(pbest^i – X^i(t)) + c_2r_2(gbest – X^i(t))

$$

the place $r_1$ and $r_2$ are random numbers between 0 and 1, constants $w$, $c_1$, and $c_2$ are parameters to the PSO algorithm, and $pbest^i$ is the place that gives among the finest $f(X)$ price ever explored by particle $i$ and $gbest$ is that explored by all the particles throughout the swarm.

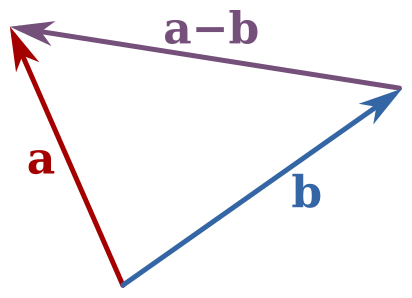

Note that $pbest^i$ and $X^i(t)$ are two place vectors and the excellence $pbest^i – X^i(t)$ is a vector subtraction. Adding this subtraction to the distinctive velocity $V^i(t)$ is to hold the particle once more to the place $pbest^i$. Similar are for the excellence $gbest – X^i(t)$.

We identify the parameter $w$ the inertia weight mounted. It is between 0 and 1 and determines how lots must the particle follow its earlier velocity (i.e., velocity and route of the search). The parameters $c_1$ and $c_2$ are often known as the cognitive and the social coefficients respectively. They controls how lots weight should be given between refining the search outcomes of the particle itself and recognizing the search outcomes of the swarm. We can take into consideration these parameters controls the commerce off between exploration and exploitation.

The positions $pbest^i$ and $gbest$ are updated in each iteration to reflect among the finest place ever found up to now.

One fascinating property of this algorithm that distinguishs it from totally different optimization algorithms is that it would not depend on the gradient of the goal function. In gradient descent, as an example, we seek for the minimal of a function $f(X)$ by shifting $X$ to the route of $-nabla f(X)$ as it is the place the function happening the quickest. For any particle on the place $X$ in the meanwhile, the best way it strikes would not depend on which route is the “down hill” nevertheless solely on the place are $pbest$ and $gbest$. This makes PSO notably acceptable if differentiating $f(X)$ is hard.

Another property of PSO is that it might be parallelized merely. As we’re manipulating plenty of particles to hunt out the optimum decision, each particles shall be updated in parallel and we solely wish to collect the updated price of $gbest$ as quickly as per iteration. This makes map-reduce construction a perfect candidate to implement PSO.

Implementation

Here we current how we’re capable of implement PSO to hunt out the optimum decision.

For the similar function as we confirmed above, we’re capable of first define it as a Python function and current it in a contour plot:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | import numpy as np import matplotlib.pyplot as plt def f(x,y): “Objective function” return (x–3.14)**2 + (y–2.72)**2 + np.sin(3*x+1.41) + np.sin(4*y–1.73) # Contour plot: With the worldwide minimal confirmed as “X” on the plot x, y = np.array(np.meshgrid(np.linspace(0,5,100), np.linspace(0,5,100))) z = f(x, y) x_min = x.ravel()[z.argmin()] y_min = y.ravel()[z.argmin()] plt.decide(figsize=(8,6)) plt.imshow(z, extent=[0, 5, 0, 5], origin=‘lower’, cmap=‘viridis’, alpha=0.5) plt.colorbar() plt.plot([x_min], [y_min], marker=‘x’, markersize=5, color=“white”) contours = plt.contour(x, y, z, 10, colors=‘black’, alpha=0.4) plt.clabel(contours, inline=True, fontsize=8, fmt=“%.0f”) plt.current() |

Contour plot of f(x,y)

Here we plotted the function $f(x,y)$ throughout the space of $0le x,yle 5$. We can create 20 particles at random locations on this space, together with random velocities sampled over a standard distribution with suggest 0 and commonplace deviation 0.1, as follows:

1 2 3 | n_particles = 20 X = np.random.rand(2, n_particles) * 5 V = np.random.randn(2, n_particles) * 0.1 |

which we’re capable of current their place on the similar contour plot:

Initial place of particles

From this, we’re capable of already uncover the $gbest$ as among the finest place ever found by all the particles. Since the particles did not uncover the least bit, their current place is their $pbest^i$ as properly:

The vector pbest_obj is without doubt one of the finest price of the goal function found by each particle. Similarly, gbest_obj is without doubt one of the finest scalar price of the goal function ever found by the swarm. We are using min() and argmin() capabilities proper right here on account of we set it as a minimization downside. The place of gbest is marked as a star below

Position of gbest is marked as a star

Let’s set $c_1=c_2=0.1$ and $w=0.8$. Then we’re capable of change the positions and velocities based mostly on the elements we talked about above, after which change $pbest^i$ and $gbest$ afterwards:

1 2 3 4 5 6 7 8 9 10 11 | c1 = c2 = 0.1 w = 0.8 # One iteration r = np.random.rand(2) V = w * V + c1*r[0]*(pbest – X) + c2*r[1]*(gbest.reshape(–1,1)–X) X = X + V obj = f(X[0], X[1]) pbest[:, (pbest_obj >= obj)] = X[:, (pbest_obj >= obj)] pbest_obj = np.array([pbest_obj, obj]).max(axis=0) gbest = pbest[:, pbest_obj.argmin()] gbest_obj = pbest_obj.min() |

The following is the place after the first iteration. We mark among the finest place of each particle with a black dot to distinguish from their current place, which can be set in blue.

Positions of particles after one iteration

We can repeat the above code part for plenty of events and see how the particles uncover. This is the tip end result after the second iteration:

Positions of particles after two iterations

and that’s after the fifth iteration, remember that the place of $gbest$ as denoted by the star modified:

Positions of particles after 5 iterations

and after twentieth iteration, we already very close to the optimum:

Positions of particles after 20 iterations

This is the animation displaying how we uncover the optimum decision as a result of the algorithm progressed. See in case you may uncover some resemblance to the movement of a flock of birds:

Animation of particle actions

So how shut is our decision? In this express occasion, the worldwide minimal we found by exhaustive search is on the coordinate $(3.182,3.131)$ and the one found by PSO algorithm above is at $(3.185,3.130)$.

Variations

All PSO algorithms are principally the similar as we talked about above. In the above occasion, we set the PSO to run in a set number of iterations. It is trivial to set the number of iterations to run dynamically in response to the progress. For occasion, we’re capable of make it stop as quickly as we can’t see any change to the worldwide best decision $gbest$ in fairly just a few iterations.

Research on PSO have been completely on the suitable strategy to determine the hyperparameters $w$, $c_1$, and $c_2$ or numerous their values as a result of the algorithm progressed. For occasion, there are proposals making the inertia weight linear reducing. There are moreover proposals attempting to make the cognitive coefficient $c_1$ reducing whereas the social coefficient $c_2$ rising to hold further exploration initially and additional exploitation on the end. See, as an example, Shi and Eberhart (1998) and Eberhart and Shi (2000).

Complete Example

It should be easy to see how we’re capable of change the above code to resolve a greater dimensional purpose function, or switching from minimization to maximization. The following is the entire occasion of discovering the minimal degree of the function $f(x,y)$ proposed above, together with the code to generate the plot animation:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 | import numpy as np import matplotlib.pyplot as plt from matplotlib.animation import FuncAnimation def f(x,y): “Objective function” return (x–3.14)**2 + (y–2.72)**2 + np.sin(3*x+1.41) + np.sin(4*y–1.73) # Compute and plot the function in 3D inside [0,5]x[0,5] x, y = np.array(np.meshgrid(np.linspace(0,5,100), np.linspace(0,5,100))) z = f(x, y) # Find the worldwide minimal x_min = x.ravel()[z.argmin()] y_min = y.ravel()[z.argmin()] # Hyper-parameter of the algorithm c1 = c2 = 0.1 w = 0.8 # Create particles n_particles = 20 np.random.seed(100) X = np.random.rand(2, n_particles) * 5 V = np.random.randn(2, n_particles) * 0.1 # Initialize data pbest = X pbest_obj = f(X[0], X[1]) gbest = pbest[:, pbest_obj.argmin()] gbest_obj = pbest_obj.min() def change(): “Function to do one iteration of particle swarm optimization” world V, X, pbest, pbest_obj, gbest, gbest_obj # Update params r1, r2 = np.random.rand(2) V = w * V + c1*r1*(pbest – X) + c2*r2*(gbest.reshape(–1,1)–X) X = X + V obj = f(X[0], X[1]) pbest[:, (pbest_obj >= obj)] = X[:, (pbest_obj >= obj)] pbest_obj = np.array([pbest_obj, obj]).min(axis=0) gbest = pbest[:, pbest_obj.argmin()] gbest_obj = pbest_obj.min() # Set up base decide: The contour map fig, ax = plt.subplots(figsize=(8,6)) fig.set_tight_layout(True) img = ax.imshow(z, extent=[0, 5, 0, 5], origin=‘lower’, cmap=‘viridis’, alpha=0.5) fig.colorbar(img, ax=ax) ax.plot([x_min], [y_min], marker=‘x’, markersize=5, color=“white”) contours = ax.contour(x, y, z, 10, colors=‘black’, alpha=0.4) ax.clabel(contours, inline=True, fontsize=8, fmt=“%.0f”) pbest_plot = ax.scatter(pbest[0], pbest[1], marker=‘o’, color=‘black’, alpha=0.5) p_plot = ax.scatter(X[0], X[1], marker=‘o’, color=‘blue’, alpha=0.5) p_arrow = ax.quiver(X[0], X[1], V[0], V[1], color=‘blue’, width=0.005, angles=‘xy’, scale_units=‘xy’, scale=1) gbest_plot = plt.scatter([gbest[0]], [gbest[1]], marker=‘*’, s=100, color=‘black’, alpha=0.4) ax.set_xlim([0,5]) ax.set_ylim([0,5]) def animate(i): “Steps of PSO: algorithm change and current in plot” title = ‘Iteration {:02d}’.format(i) # Update params change() # Set picture ax.set_title(title) pbest_plot.set_offsets(pbest.T) p_plot.set_offsets(X.T) p_arrow.set_offsets(X.T) p_arrow.set_UVC(V[0], V[1]) gbest_plot.set_offsets(gbest.reshape(1,–1)) return ax, pbest_plot, p_plot, p_arrow, gbest_plot anim = FuncAnimation(fig, animate, frames=itemizing(fluctuate(1,50)), interval=500, blit=False, repeat=True) anim.save(“PSO.gif”, dpi=120, writer=“imagemagick”) print(“PSO found best decision at f({})={}”.format(gbest, gbest_obj)) print(“Global optimum at f({})={}”.format([x_min,y_min], f(x_min,y_min))) |

Further Reading

These are the distinctive papers that proposed the particle swarm optimization, and the early evaluation on refining its hyperparameters:

- Kennedy J. and Eberhart R. C. Particle swarm optimization. In Proceedings of the International Conference on Neural Networks; Institute of Electrical and Electronics Engineers. Vol. 4. 1995. pp. 1942–1948. DOI: 10.1109/ICNN.1995.488968

- Eberhart R. C. and Shi Y. Comparing inertia weights and constriction elements in particle swarm optimization. In Proceedings of the 2000 Congress on Evolutionary Computation (CEC ‘00). Vol. 1. 2000. pp. 84–88. DOI: 10.1109/CEC.2000.870279

- Shi Y. and Eberhart R. A modified particle swarm optimizer. In Proceedings of the IEEE International Conferences on Evolutionary Computation, 1998. pp. 69–73. DOI: 10.1109/ICEC.1998.699146

Summary

In this tutorial we realized:

- How particle swarm optimization works

- How to implement the PSO algorithm

- Some potential variations throughout the algorithm

As particle swarm optimization would not have a great deal of hyper-parameters and actually permissive on the goal function, it might be used to resolve a wide range of points.

Get a Handle on Modern Optimization Algorithms!

Develop Your Understanding of Optimization

…with just a few strains of python code

Discover how in my new Ebook:

Optimization for Machine Learning

It offers self-study tutorials with full working code on:

Gradient Descent, Genetic Algorithms, Hill Climbing, Curve Fitting, RMSProp, Adam,

and somewhat extra…

Bring Modern Optimization Algorithms to

Your Machine Learning Projects

See What’s Inside

3 Books on Optimization for Machine Learning

A Gentle Introduction to Stochastic Optimization Algorithms

How to Implement Bayesian Optimization from Scratch…

Local Optimization Versus Global Optimization

Why Optimization Is Important in Machine Learning

Combined Algorithm Selection and Hyperparameter…

- Get link

- X

- Other Apps

Comments

Post a Comment